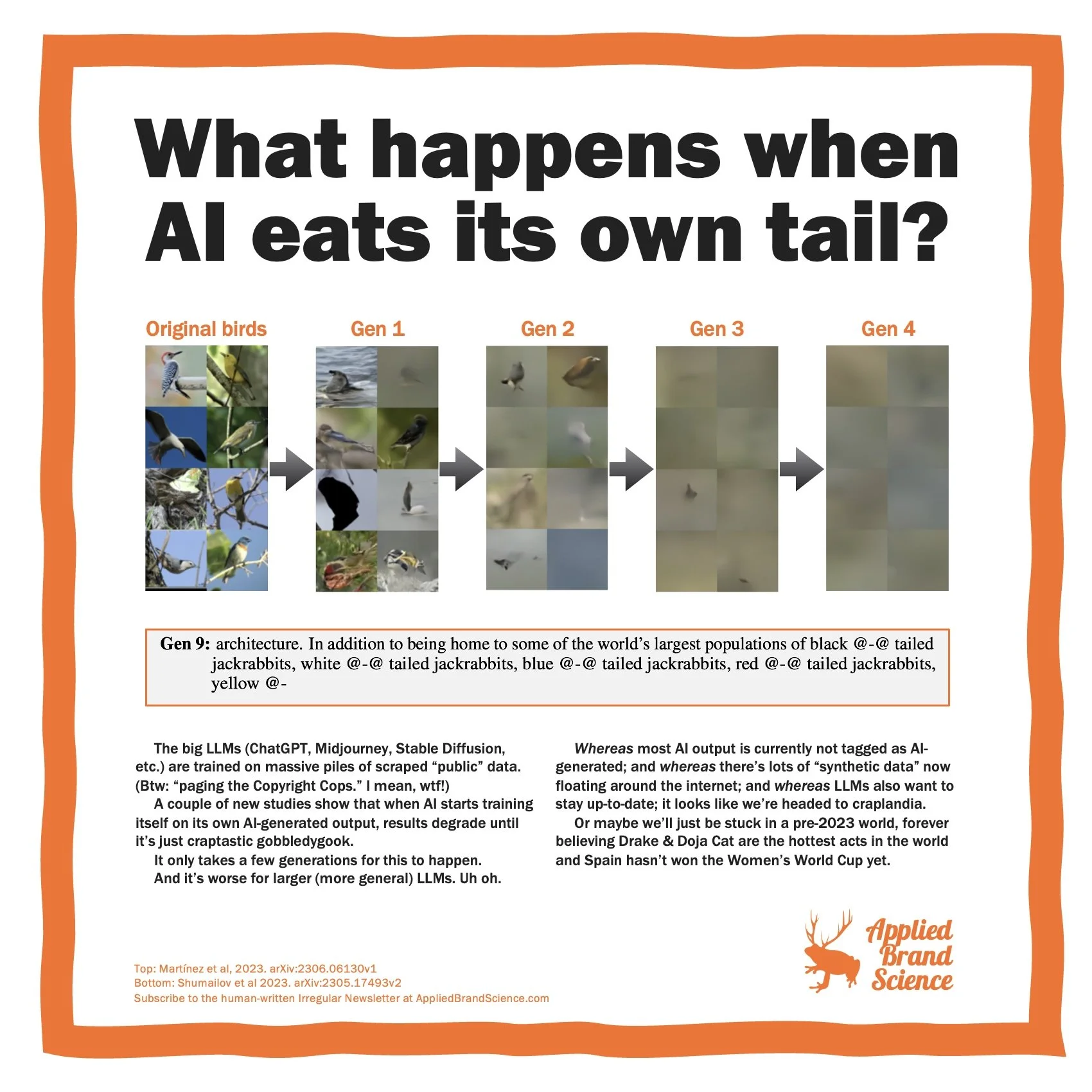

What happens when AI eats its own tail?

The big LLMs (ChatGPT, Midjourney, Stable Diffusion, etc.) are trained on massive piles of scraped “public” data.

(Btw: “Paging the Copyright Cops.” I mean, wtf!)

A couple of new studies show that when AI starts training itself on its own AI-generated output, results degrade until it’s just craptastic gobbledygook.

It only takes a few generations for this to happen. And it’s worse for larger (more general) LLMs.

Uh oh.

WHEREAS most AI output is currently not tagged as AI-generated; and

WHEREAS there’s an explosion of “synthetic data” now polluting the internet; and

WHEREAS LLMs also want to stay up-to-date;

THEREFORE it looks like we’re headed to Craplandia.

Or maybe we’ll just be stuck in a pre-2023 world, forever believing Drake & Doja Cat are the hottest acts in the world and Spain hasn’t won the Women’s World Cup yet.

Some ways out, perhaps:

🔸 Only use tightly-trained (perhaps private) LLMs.

🔸 Look into “classifier-free guidance”.

🔸 Be vewwy vewwy caweful with synthetic data.