Your source for knowledge, reports, thought leadership, and other tomfoolery. No fluff. No folklore. Just sticky, science-backed brand truth.

The Most ABSurd Blog

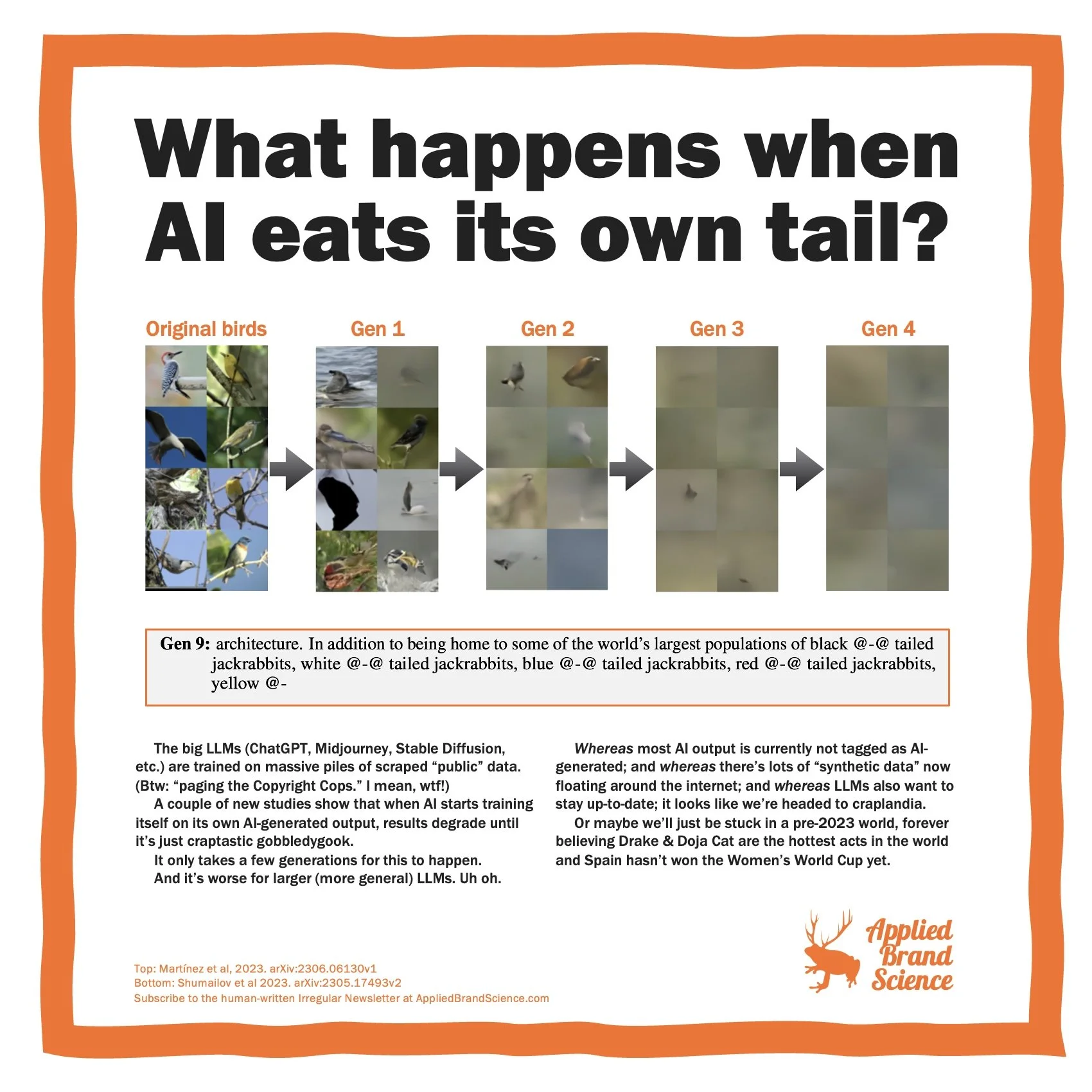

What happens when AI eats its own tail?

When AI starts training on its own AI-made junk, the results spiral from smart to straight-up gobbledygook.

The robot will now write the ads

Can AI really write better headlines than humans, or is it just good at stealing from Ogilvy’s playbook?