Why do polls fail?

Like, why do political polls seem to be so bad at predicting elections?

There are two parts to the answer.

1. UNACCOUNTED-FOR ERROR

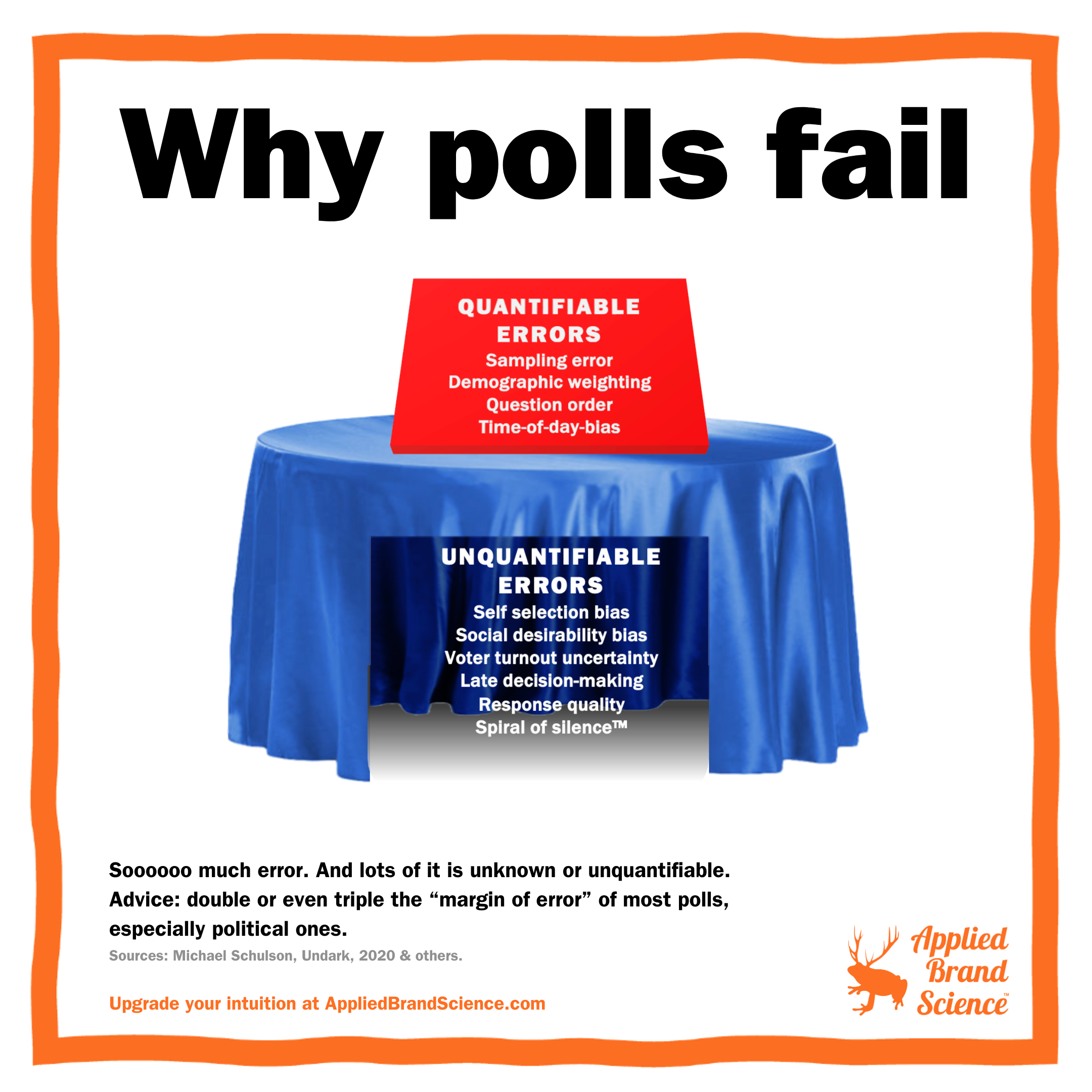

There are lots of sources of error pollsters can & do account for: sampling error, demographic imbalance (fixed by weighting), question order issues, time-of-day bias, etc.

These are quantifiable. They’re often summarized in a “Margin of Error”. You know: sample 1,000 people and there’s a +/- 3% Margin of Error on the results.
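Here's a quick sketch of where that +/- 3% comes from. It uses the textbook 95% formula for a simple random sample, with the worst-case 50/50 split. The function name `margin_of_error` is just a label for this example, and real pollsters layer weighting adjustments on top of this.

```python
import math

def margin_of_error(n, p=0.5, z=1.96):
    """95% margin of error for a simple random sample of size n.

    p=0.5 is the worst case (widest margin); z=1.96 is the 95% normal quantile.
    """
    return z * math.sqrt(p * (1 - p) / n)

# Compare a few common sample sizes.
for n in (500, 1000, 2000):
    print(f"n={n}: +/- {margin_of_error(n):.1%}")
```

Notice that doubling the sample from 1,000 to 2,000 only trims the margin by about a point. Precision gets expensive fast.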

But there are other errors that AREN’T accounted for in the Margin of Error.

Stuff like self-selection bias, social desirability bias, voter turnout uncertainty, etc.

These are present in most surveys, but they’re particularly vexing in election polls. And a larger sample usually doesn’t make them go away.
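To see why a bigger sample doesn't help, here's a rough sketch. It assumes a made-up 3-point systematic bias (the real number is unknown, which is the whole problem) and simply adds it to the sampling margin of error. `ASSUMED_BIAS` and both function names are invented for this illustration.

```python
import math

ASSUMED_BIAS = 0.03  # hypothetical 3-point systematic error, invented for illustration

def sampling_moe(n, z=1.96):
    """Worst-case (50/50) 95% sampling margin of error for sample size n."""
    return z * math.sqrt(0.25 / n)

def rough_total_error(n, bias=ASSUMED_BIAS):
    """Crude bound: systematic bias plus sampling margin of error."""
    return abs(bias) + sampling_moe(n)

# Sampling error shrinks as n grows; the bias term does not.
for n in (1_000, 10_000, 100_000):
    print(f"n={n:>7,}: sampling +/- {sampling_moe(n):.1%}, "
          f"total ~ +/- {rough_total_error(n):.1%}")
```

The sampling part melts away as the sample grows, but the total never drops below the bias. You can't buy your way out of it with more interviews.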

So problem 1 is that these polls are waaaay more uncertain than they seem.

2. OUR EXPECTATIONS OF POLL PREDICTION

The other reason polls seem so bad is that we expect too much of them.

Election polls are more like weather forecasts. When the forecast calls for a 20% chance of rain, and then it rains, we don’t freak out. (Mostly.)

But with election polls, we expect certainty. Granted, we’ve been trained by how they’re reported to expect unrealistic precision. But it’s also on us to embrace the uncertainty.

SO SOME LESSONS:

🍊 For election polls, we should probably DOUBLE or TRIPLE the Margin of Error to account for, uh, stuff we can’t account for. 🤪

🍊 Since most US election polls are on ~1,000 people, we should assume +/- 8% to 10% on those suckers.

🍊 Pollsters should consider reporting their results like weather: with percent chances of someone winning. And ROUNDING those numbers to account for all the invisible error.

🍊 As poll readers, we should treat election polls more like weather forecasts and less like crystal balls. (But it’s hard to re-tune your intuition.)
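Here's one way the weather-style reporting could look, as a sketch only. It assumes a two-candidate race, a normal error model, and a hypothetical `inflate` knob that widens the reported Margin of Error for the stuff we can't measure. `win_chance` is an invented name, not anything pollsters actually use.

```python
from statistics import NormalDist

def win_chance(share, moe, inflate=1.0, round_to=10):
    """Rounded percent chance that a candidate's true two-way share tops 50%.

    share:    polled share of the two-way vote, e.g. 0.52
    moe:      reported 95% margin of error, e.g. 0.03
    inflate:  assumed multiplier for error the margin leaves out
    round_to: report to the nearest this-many percent
    """
    sd = (moe * inflate) / 1.96  # convert a 95% margin back to a standard deviation
    chance = 1 - NormalDist(mu=share, sigma=sd).cdf(0.5)
    return int(round(chance * 100 / round_to) * round_to)

# A candidate polling at 52% with a reported +/- 3% margin.
print("Taking the margin at face value:", win_chance(0.52, 0.03), "%")
print("Tripling the margin:", win_chance(0.52, 0.03, inflate=3), "%")
```

Same poll, same 52%. Once the margin is tripled, a near lock turns into something a lot closer to a coin flip with a lean.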

This is a big topic. But these would be nice little steps towards polls not “failing” so much.